Insider Brief

- A new study by Kipu Quantum and IBM demonstrates that a tailored quantum algorithm running on IBM’s 156-qubit processors can solve certain hard optimization problems faster than classical solvers like CPLEX and simulated annealing.

- The quantum method, called bias-field digitized counterdiabatic quantum optimization (BF-DCQO), achieved comparable or better solutions in seconds, while classical methods required tens of seconds or more.

- The advantage was observed across 250 specially designed problem instances, suggesting that near-term quantum hardware can offer measurable runtime benefits without error correction.

A new study by researchers at Kipu Quantum, in partnership with IBM, reports that quantum computers can now outperform top-tier classical optimization software on specific, specially constructed problems. According to the researchers, this shows that, at least for these tasks, quantum systems are already faster, with speed measured in real runtimes rather than projections.

In this study, the team used IBM’s 156-qubit quantum processors and a specially tailored quantum algorithm to show that their quantum system achieved solutions to hard optimization problems in seconds — faster than IBM’s own CPLEX software and the widely used simulated annealing approach running on powerful classical hardware.

If it holds, the finding, published in a preprint on arXiv, could mark one of the clearest demonstrations yet of a runtime quantum advantage in a practical setting, according to the team.

They write: “This study illustrates the practical utility of current quantum hardware without the need for quantum error correction. It also provides experimental evidence for heuristic digital quantum optimization speedups, pointing toward the possibility of quantum advantage. Ultimately, our results show that contemporary quantum processors, when paired with advanced algorithms like BF-DCQO, could be capable of delivering solutions to industrial-scale optimization problems.”

Fastest to a Good Answer

The study focuses on a class of problems known as higher-order unconstrained binary optimization (HUBO), which model real-world tasks like portfolio selection, network routing, or molecule design. These problems are computationally intensive because the number of possible solutions grows exponentially with problem size. On paper, those are exactly the types of problems that most quantum theorists believe quantum computers, once robust enough, would excel at solving.
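
To make the exponential growth concrete, here is a minimal sketch of a HUBO cost function and a brute-force search over it. The four-variable instance, the 0/1 variable convention, and the names `terms`, `hubo_cost` and `brute_force` are illustrative assumptions, not taken from the study, which may formulate its problems differently.

```python
from itertools import product

# Hypothetical toy instance: each key is a tuple of variable indices and
# each value is the coefficient multiplying the product of those variables.
# The three-index term is what makes the problem "higher-order".
terms = {
    (0,): 1.0,
    (1, 2): -2.0,
    (0, 1, 2): 1.5,
    (2, 3): -1.0,
}

def hubo_cost(bits, terms):
    """Add up the coefficients of all terms whose variables are all 1."""
    return sum(c for idx, c in terms.items() if all(bits[i] for i in idx))

def brute_force(n, terms):
    """Try all 2**n assignments; only feasible for very small n."""
    return min(product((0, 1), repeat=n), key=lambda b: hubo_cost(b, terms))

best = brute_force(4, terms)
print(best, hubo_cost(best, terms))  # (0, 1, 1, 1) -3.0
```

With four variables there are only 16 assignments to check. With 156 variables the same loop would face 2**156 assignments, roughly 9 × 10^46, which is why practical solvers settle for good approximate answers.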

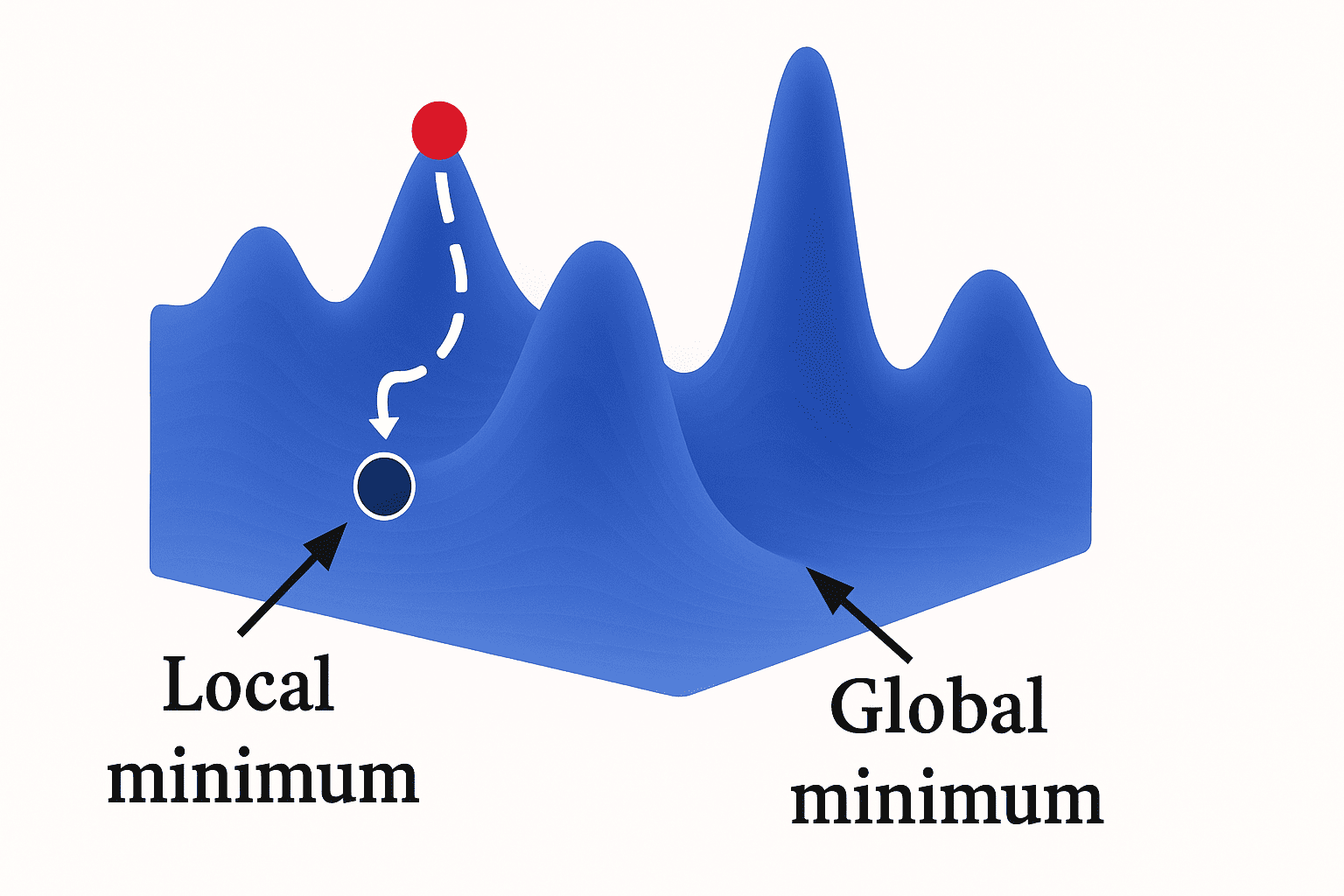

The researchers evaluated how well different solvers — both classical and quantum — could find approximate solutions to these HUBO problems. The quantum system used a technique called bias-field digitized counterdiabatic quantum optimization (BF-DCQO). The method builds on known quantum strategies by evolving a quantum system under special guiding fields that help it stay on track toward low-energy (i.e., optimal) states. Another way to put it: It’s like giving the quantum system a map and a push, helping it avoid getting stuck in shallow valleys and instead find the most efficient path to the best solution.

In tests involving up to 156 variables, a relatively high count for quantum experiments, the quantum solver consistently reached approximate solutions faster than the classical methods. For a representative problem with 156 variables, BF-DCQO reached a solution with a high approximation ratio, a measure of solution quality, in just half a second. CPLEX took roughly 30 to 50 seconds to match that solution quality, even with 10 CPU threads running in parallel, according to the study.

The researchers further confirmed this advantage across a suite of 250 randomly generated hard instances, using distributions specifically selected to challenge classical algorithms. BF-DCQO delivered results up to 80 times faster than CPLEX in some tests and over three times faster than simulated annealing in others.

Exploiting System Limits, Not Replacing Them

Unlike many quantum advantage claims that rely on large, error-corrected systems or hypothetical architectures, the Kipu Quantum team operated within the constraints of noisy intermediate-scale quantum (NISQ) hardware. They ran all experiments on IBM’s Marrakesh and Kingston quantum processors, which have limited qubit connectivity and finite coherence times. These can be real barriers to building useful quantum systems.

To work around these limitations, the team carefully designed problem instances that could be embedded into IBM’s heavy-hexagonal lattice — the qubit layout used in Heron chips — with just a single “swap layer” to rearrange connections. They also used heavy-tailed distributions like Cauchy and Pareto to create more rugged optimization landscapes, which tend to be harder for classical methods but still accessible to quantum algorithms that can tunnel through local minima.
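
As a rough illustration of what heavy-tailed coefficients look like, the sketch below draws couplings from Cauchy and Pareto distributions using only the Python standard library. The term list, the shape value of 1.5, the random sign on the Pareto draws, and the function names are assumptions made for this example; the study's actual instance generator is not described here.

```python
import math
import random

def sample_cauchy(rng, scale=1.0):
    """Inverse-transform sample from a zero-centred Cauchy distribution."""
    return scale * math.tan(math.pi * (rng.random() - 0.5))

def sample_signed_pareto(rng, shape=1.5):
    """Pareto magnitude (minimum 1) with a random sign (sign is an assumption).

    A smaller shape parameter gives a heavier tail.
    """
    return rng.choice((-1.0, 1.0)) * rng.paretovariate(shape)

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical two- and three-body terms on four variables.
term_indices = [(0, 1), (1, 2), (2, 3), (0, 1, 2), (1, 2, 3)]
cauchy_terms = {idx: sample_cauchy(rng) for idx in term_indices}
pareto_terms = {idx: sample_signed_pareto(rng) for idx in term_indices}

for idx in term_indices:
    print(idx, round(cauchy_terms[idx], 3), round(pareto_terms[idx], 3))
```

Because both distributions occasionally produce very large values, a few terms can dominate the cost function, which is one way to picture the "rugged" landscapes the article mentions.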

The researchers did not rely on the quantum component alone; the hybrid approach was essential to securing the quantum edge. Their BF-DCQO pipeline includes classical preprocessing and postprocessing, such as initializing the quantum system with good guesses from fast simulated annealing runs and cleaning up final results with simple local searches.
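
The kind of "simple local search" used for cleanup can be pictured as a greedy single-bit-flip descent. The sketch below is one common variant, written under the assumption of a 0/1 cost function like the toy one defined inside it; it is not the study's actual postprocessing routine, and all names are illustrative.

```python
# Hypothetical toy instance: index tuples mapped to coefficients.
terms = {(0,): 1.0, (1, 2): -2.0, (0, 1, 2): 1.5, (2, 3): -1.0}

def hubo_cost(bits, terms):
    """Add up the coefficients of all terms whose variables are all 1."""
    return sum(c for idx, c in terms.items() if all(bits[i] for i in idx))

def local_search(bits, terms):
    """Flip single bits greedily until no single flip lowers the cost."""
    bits = list(bits)
    cost = hubo_cost(bits, terms)
    improved = True
    while improved:
        improved = False
        for i in range(len(bits)):
            bits[i] ^= 1                      # tentatively flip bit i
            new_cost = hubo_cost(bits, terms)
            if new_cost < cost:
                cost = new_cost               # keep the improving flip
                improved = True
            else:
                bits[i] ^= 1                  # undo a non-improving flip
    return tuple(bits), cost

print(local_search((1, 1, 1, 0), terms))  # ((0, 1, 1, 1), -3.0)
print(local_search((1, 0, 0, 0), terms))  # ((0, 0, 0, 0), 0)
```

The second call shows the weakness of local search on its own: it stops at a local minimum with cost 0 even though a cost of -3.0 exists. That is why the quality of the starting point, here supplied by the quantum stage, matters.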

Why It Works: Counterdiabatic Evolution and CVaR Filtering

At the heart of the BF-DCQO algorithm is an adaptation of counterdiabatic driving, a physics-inspired strategy where an extra term is added to the Hamiltonian — the system’s energy function — to suppress unwanted transitions. This helps the quantum system evolve faster and more accurately toward its lowest energy configuration.
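
In the general counterdiabatic-driving literature, the extra term is usually written as follows. The symbols are standard textbook notation and an assumption here, since the article does not give the study's exact expression:

```latex
H(t) = \bigl(1 - \lambda(t)\bigr)\, H_{\mathrm{i}}
     + \lambda(t)\, H_{\mathrm{f}}
     + \dot{\lambda}(t)\, A_{\lambda},
\qquad \lambda(0) = 0,\quad \lambda(T) = 1 .
```

Here $H_{\mathrm{i}}$ is an initial Hamiltonian whose ground state is easy to prepare, $H_{\mathrm{f}}$ encodes the optimization problem, $\lambda(t)$ is the schedule that interpolates between them over a total time $T$, and $A_{\lambda}$ is an (in practice approximate) adiabatic gauge potential. Because the added term is proportional to the sweep rate $\dot{\lambda}(t)$, it vanishes for an infinitely slow sweep and grows as the evolution is sped up, which is when unwanted transitions would otherwise become likely.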

The algorithm further uses a digitized version of this evolution, broken into layers of quantum gates, according to the study. After each layer, the system is measured, and a Conditional Value-at-Risk (CVaR) filtering method is used to retain only the lowest-energy outcomes. If “value” and “risk” sound like finance terms, that’s no accident — the technique comes from financial risk management, where it helps, for example, investors prepare for the worst 5% of market outcomes. In the quantum setting, the idea is flipped: instead of avoiding the worst cases, the algorithm zeroes in on the best 5% of measurement results — those closest to an optimal solution. These selected bitstrings are then used to update the guiding fields for the next iteration, gradually refining the solution.
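
The filtering step can be sketched in a few lines. In the example below, the default 5% fraction follows the article's description, while the 0 → +1, 1 → -1 spin mapping, the use of the per-variable average as the next bias, the sample data, and the function names are assumptions for illustration rather than the study's implementation.

```python
def cvar_filter(samples, energies, alpha=0.05):
    """Return the alpha fraction of samples with the lowest energies."""
    keep = max(1, int(alpha * len(samples)))
    order = sorted(range(len(samples)), key=lambda i: energies[i])
    return [samples[i] for i in order[:keep]]

def bias_fields(selected):
    """Average each variable over the kept bitstrings, as a spin in [-1, 1].

    Assumed convention: bit 0 maps to spin +1 and bit 1 maps to spin -1.
    """
    n = len(selected[0])
    return [sum(1 - 2 * s[i] for s in selected) / len(selected) for i in range(n)]

# Hypothetical measurement record: 20 shots over four distinct bitstrings.
samples = [(0, 1, 1, 1), (1, 1, 1, 0), (0, 1, 1, 0), (0, 0, 0, 0)] * 5
energies = [-3.0, 0.5, -2.0, 0.0] * 5

best = cvar_filter(samples, energies, alpha=0.5)  # keep the best 10 shots
print(bias_fields(best))  # [1.0, -1.0, -1.0, 0.0]
```

Variables on which the best shots agree get a strong bias of +1 or -1, while the last variable, on which they split evenly, gets a bias of 0 and is left undecided for the next iteration.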

Because this process doesn’t rely on error correction, it is well suited to today’s NISQ devices. And because the algorithm uses only shallow circuits with mostly native operations like single-qubit rotations and two- or three-body interactions, it can fit within the short coherence windows of real hardware.

Limitations and Scalability

The team acknowledges that their results hinge on specially constructed problem instances. The quantum system doesn’t yet offer a universal advantage across all types of problems. Moreover, as with all NISQ approaches, hardware noise and limited circuit depth remain constraints. The experiments were limited to instances with only one swap layer to avoid exceeding these limits.

But even with those restrictions, the researchers show that the performance gap between quantum and classical methods widens as problem size increases, a promising trend that suggests future hardware improvements could extend the quantum advantage further. With continuing improvements in qubit coherence times, connectivity, and gate fidelity, the study suggests that quantum runtime advantages could grow from a matter of seconds to several orders of magnitude, potentially marking a turning point for real-world quantum computing.

The team writes in the paper: “Our analysis reveals that the performance improvement becomes increasingly evident as the system size grows. Given the rapid progress in quantum hardware, we expect that this improvement will become even more pronounced, potentially leading to a quantum advantage of several orders of magnitude.”

They also explored how tuning the parameters of the problem generation process (specifically the shape parameter of the Pareto distribution) could produce instances that are difficult for both classical solvers at once. On these hardest problems, the quantum method outperformed both simulated annealing and CPLEX.

In related work published recently on arXiv, a stronger version of this algorithm was shown to beat a popular classical solver at finding better solutions to tough optimization problems. It did not just run faster; it also searched more efficiently, homing in on better answers.

Another Step Toward Quantum Value

The results presented in this study do not claim a universal quantum speedup, but they do suggest that targeted quantum algorithms, carefully matched to specific problems and deployed on current hardware, can outperform some of the best classical solvers available today.

This opens the door to near-term applications in optimization-heavy industries such as logistics, materials, and finance — not with hypothetical quantum systems, but with those that exist now.

Commenting on the finding on LinkedIn, Jay Gambetta, IBM Fellow and vice president of IBM Quantum, wrote, “This is another step toward practical, scalable quantum optimization with today’s hardware.”
